Unicode and Japanese characters

Incompatible encodings complicate data migration from different systems

Different encodings are a major source of problems during data migration. This applies both to character encodings such as UTF-8 and ASCII and to different writing systems such as those used in Japanese. If data records from different systems are to be merged, they must therefore be cleansed and recoded before migration. The data quality tools from Stuttgart-based software specialist TOLERANT Software can provide support here, as they can read and analyse texts from different writing systems.

Data can be encoded in different character encodings. The common international standard is Unicode, which assigns a specific code point to each text element of practically all known writing cultures and character systems. UTF-8 is an encoding of Unicode that works with 8-bit units: a single byte can take 2^8 = 256 values, and UTF-8 uses one to four such bytes per character, so it can represent every Unicode code point (more than one million), not just 256 characters. A predecessor that is still widely used is the 7-bit ASCII code, which can represent 2^7 = 128 characters. These 128 characters are encoded identically in ASCII and UTF-8, so problems occur when UTF-8 encoded data records that contain any other characters are integrated into an ASCII-encoded database. A system that expects ASCII cannot interpret the multi-byte sequences and therefore cannot recognise duplicates.
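The difference between the two encodings can be made concrete with a few lines of Python. The following is a minimal sketch for illustration only; the helper names `utf8_length` and `is_ascii_safe` and the sample names are assumptions made here and are not taken from any TOLERANT Software product.

```python
def utf8_length(text: str) -> int:
    """Return the number of bytes the text occupies when encoded as UTF-8."""
    return len(text.encode("utf-8"))


def is_ascii_safe(data: bytes) -> bool:
    """Return True if the bytes can be decoded as 7-bit ASCII without errors."""
    try:
        data.decode("ascii")
        return True
    except UnicodeDecodeError:
        return False


# Sample values chosen for illustration: a pure ASCII name, a name with an
# umlaut (U+00FC) and a name written in kanji.
samples = ["Takahashi", "M\u00fcller", "\u9ad8\u6a4b"]

for name in samples:
    raw = name.encode("utf-8")
    print(name, "characters:", len(name), "bytes:", len(raw),
          "ASCII-safe:", is_ascii_safe(raw))
```

Only the first sample survives a strict ASCII decode. The other two contain multi-byte sequences, which is the situation in which an ASCII-based system can no longer compare records reliably.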

Recorded twice?

Duplicates are data records that appear more than once in a database. Duplicate recognition is an essential part of merging data, as it is the only way to prevent data quality problems such as the same customer being entered twice. Customers who receive the same invoice more than once, for example, quickly become annoyed and may churn. To prevent this, care should be taken before data migration to ensure that the data encodings are compatible. If they are not, the data must be recoded before migration.

How data is prepared

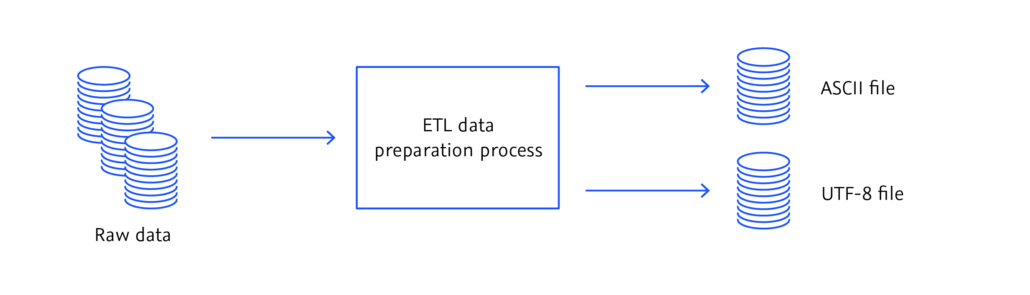

In an ETL process, the raw data is prepared before migration and converted into either an ASCII file or a UTF-encoded file. The abbreviation ETL refers to the three steps of data migration, extraction (E), transformation (T) and loading (L), which are usually run through when data is transferred from one system to another. ETL processes are batch-oriented, i.e. they run only at predefined times and prepare a limited number of data records. The data they deliver is therefore only as current as the last run.

During the preparation process, the data is extracted (E), transformed (T) and loaded (L). Illustration: © TOLERANT Software
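The three ETL steps described above can be sketched as a small recoding batch. This is a simplified illustration under stated assumptions, not the actual process used by any particular tool: the function names (`extract`, `transform`, `load`, `run_batch`), the Latin-1 source encoding and the sample records are all hypothetical.

```python
import unicodedata


def extract(raw: bytes, source_encoding: str) -> list[str]:
    """E: decode the raw bytes using the encoding of the source system.

    Decoding is strict, so bytes that do not fit the declared encoding
    raise an error instead of being silently corrupted.
    """
    return raw.decode(source_encoding).splitlines()


def transform(records: list[str]) -> list[str]:
    """T: trim whitespace, apply Unicode NFC normalisation, drop empty lines."""
    cleaned = []
    for record in records:
        record = unicodedata.normalize("NFC", record.strip())
        if record:
            cleaned.append(record)
    return cleaned


def load(records: list[str]) -> bytes:
    """L: encode the cleaned records as UTF-8 for the target system."""
    return "\n".join(records).encode("utf-8")


def run_batch(raw: bytes, source_encoding: str) -> bytes:
    """Run one batch through all three steps."""
    return load(transform(extract(raw, source_encoding)))


# Hypothetical legacy export in Latin-1 with stray whitespace and a blank line.
legacy_export = "M\u00fcller \nTakahashi\n\n".encode("latin-1")
print(run_batch(legacy_export, "latin-1"))
```

In a real migration, the source encoding has to be known or detected for each system, and the load step would write to the target database rather than return bytes.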

Japanese duplicates

However, duplicates are not only caused by different encodings; they can also be culturally determined. Japanese, for example, has three scripts, and all three are used in combination in Japanese texts. Content words such as nouns and word stems are usually written in kanji, the characters adopted from Chinese; grammatical elements such as particles and verb endings are written in the hiragana syllabary; and foreign loanwords, for example from English, are written in the katakana syllabary. As the pronunciation of kanji characters is not always clear even to native speakers, names written in kanji are often also transcribed into hiragana or katakana. As with incompatible character encodings, duplicates occur in Japanese data records when transcribed names are not assigned to their kanji equivalents. Using three examples, we present the challenges that the Japanese language poses for data migration and show how duplicates can arise.

Five spellings for one and the same name

One example is the name 髙橋 眞國 (Takahashi Makuni). Here the first kanji character, 髙, is written in a traditional variant such as the one used in the family register. Depending on the system, the same character may instead be written in the slightly different form 高. There is also a modern equivalent for the traditional kanji characters 眞國 in the second part of the name: 真国. In the katakana syllabary, the complete name is transcribed as タカハシ マクニ, and in the hiragana syllabary as たかはし まくに. During data migration, it is important to link all five spellings of the same name (traditional kanji, modern kanji, katakana, hiragana and the variant written in Latin letters) in such a way that the record can always be found and correctly assigned, regardless of how the data was entered. Without this link, the same person would be stored as five duplicates.
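Part of this linking can be approximated by reducing each spelling to a common comparison key. The sketch below is an illustration under assumptions and not a description of how any specific tool works: the variant table contains only the three characters from the example, and the function names are hypothetical. It folds traditional kanji variants onto their modern forms and converts katakana to hiragana by shifting code points, since the two kana blocks are laid out in parallel in Unicode.

```python
# Illustrative table only: a real system would need a far larger variant list.
KANJI_VARIANTS = str.maketrans({"髙": "高", "眞": "真", "國": "国"})


def fold_kanji_variants(text: str) -> str:
    """Replace traditional kanji variants with their modern equivalents."""
    return text.translate(KANJI_VARIANTS)


def katakana_to_hiragana(text: str) -> str:
    """Map katakana U+30A1..U+30F6 onto hiragana U+3041..U+3096."""
    return "".join(
        chr(ord(ch) - 0x60) if "\u30a1" <= ch <= "\u30f6" else ch
        for ch in text
    )


def spelling_key(text: str) -> str:
    """Comparison key that ignores kanji variants and the kana script used."""
    return katakana_to_hiragana(fold_kanji_variants(text))


# Traditional and modern kanji spellings reduce to the same key.
print(spelling_key("髙橋 眞國") == spelling_key("高橋 真国"))
# Katakana and hiragana spellings of the surname reduce to the same key.
print(spelling_key("タカハシ") == spelling_key("たかはし"))
```

A key of this kind only links spellings within the kanji group and within the kana group. Connecting a kanji spelling to its kana or Latin transcription still requires a reading stored with the record or a name dictionary.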

Error-tolerant duplicate recognition

Another challenge posed by Japanese script is fuzzy or error-tolerant duplicate recognition. A search is fuzzy or error-tolerant if duplicates are found even when data has been entered incorrectly (e.g. misspellings, typing errors or minimal spelling differences). In addition to its three scripts, Japanese distinguishes between full-size and small kana, a distinction comparable to upper and lower case letters. When the hiragana よ (yo), や (ya) and ゆ (yu) or the katakana ヨ (yo), ヤ (ya) and ユ (yu) merge with a preceding consonant, they are written slightly smaller. An example is the name 中島 京子 (Nakashima Kyoko), which is transcribed in hiragana as なかしま きょうこ and in katakana as ナカシマ キョウコ. The characters ょ and ョ follow き and キ respectively and are therefore written slightly smaller. If these characters are written at full size, the name from the example above would be transcribed incorrectly as “Nakashima Kiyoko”. The challenge when migrating Japanese data records is therefore to recognise duplicates even with slight spelling differences (such as full-size and small kana) and still assign them to the correct kanji characters.
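One simple way to tolerate this kind of size difference is to fold small kana onto their full-size counterparts before comparing. The following is a minimal sketch under assumptions: it covers only the six characters named above, the function names are hypothetical, and real fuzzy matching would combine several such rules with a similarity measure.

```python
# Small kana (left) and their full-size counterparts (right).
SMALL_TO_FULL = str.maketrans("ゃゅょャュョ", "やゆよヤユヨ")


def fold_small_kana(text: str) -> str:
    """Replace small ya/yu/yo kana with the full-size characters."""
    return text.translate(SMALL_TO_FULL)


def same_ignoring_kana_size(a: str, b: str) -> bool:
    """Compare two kana strings while ignoring small/full-size differences."""
    return fold_small_kana(a) == fold_small_kana(b)


# The correct transcription and the variant typed with a full-size kana
# are reported as a possible match.
print(same_ignoring_kana_size("キョウコ", "キヨウコ"))
```

Because the two spellings can also belong to genuinely different names, a match found this way is best treated as a duplicate candidate that still has to be confirmed against the kanji spelling.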

Different kanji readings

Finally, the different readings of kanji characters are particularly challenging for Japanese duplicate recognition. For example, the name 東海林太郎 can be read and pronounced either as Shouji Tarō or as Toukairin Tarō. In order to successfully master the task of data migration, data quality tools must be able to identify the katakana transcription ショウジ タロウ (Shouji Tarō) and the variant トウカイリン タロウ (Toukairin Tarō) as different readings of the same kanji characters and recognise them as duplicates.
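The idea of treating several readings as belonging to the same kanji spelling can be sketched with a lookup table. This is purely illustrative: the table `SURNAME_READINGS` contains only the example from the text, the function names are hypothetical, and a real tool would have to rely on a large name dictionary.

```python
# Hypothetical reading table holding only the example surname.
SURNAME_READINGS = {
    "東海林": {"ショウジ", "トウカイリン"},
}


def is_known_reading(kanji: str, kana: str) -> bool:
    """Return True if the kana string is a listed reading of the kanji."""
    return kana in SURNAME_READINGS.get(kanji, set())


def duplicate_candidates(kanji: str, kana_a: str, kana_b: str) -> bool:
    """Two transcriptions are duplicate candidates if both are readings
    of the same kanji spelling."""
    return is_known_reading(kanji, kana_a) and is_known_reading(kanji, kana_b)


print(duplicate_candidates("東海林", "ショウジ", "トウカイリン"))
```

A lookup of this kind can only flag candidates. Whether two records really describe the same person still depends on further attributes such as the given name or address.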

Conclusion

Problems with data migration can be avoided by checking data records from different databases before merging them. Incompatible character encodings must be cleaned up using ETL processes before data migration and recoded if necessary. Data quality tools that can read and handle all three Japanese scripts help to avoid errors during data migration. TOLERANT Software specialises in different writing systems and encodings, and its data quality tools eliminate the language-specific problems of incompatible encodings in many countries and cultures.